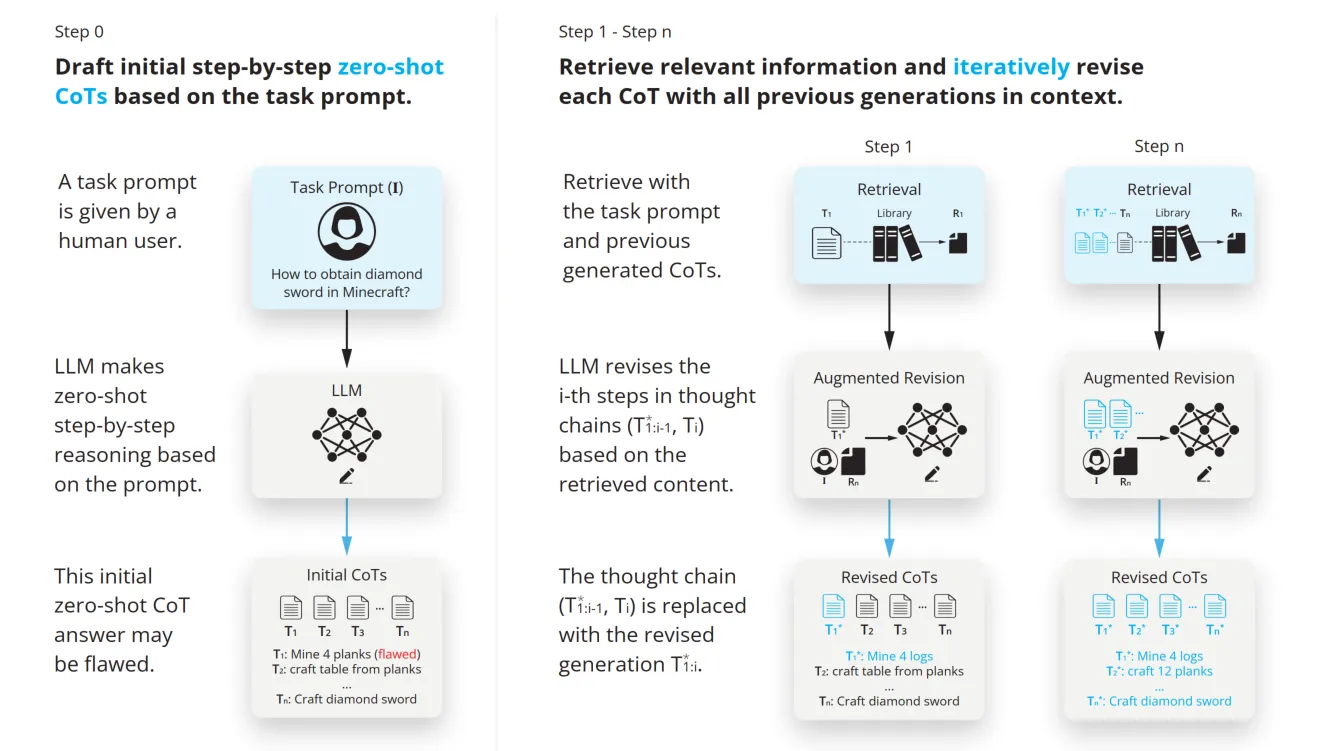

RAT (Retrieval-Augmented Thoughts) integrates RAG into CoT. It improves the factual validity of a chain of thought by applying retrieval augmentation at every thinking step, so each step is revised against retrieved information.
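To make the per-step idea concrete, here is a minimal sketch of the revision loop. It is not the authors' implementation: `rat_revise`, `toy_retrieve`, `toy_revise`, and the `FACTS` table are hypothetical names invented for illustration, and the retriever and reviser are plain-Python stand-ins for a real search backend and an LLM call. The sketch assumes a draft chain of thought already exists as a list of steps.

```python
from typing import Callable, List


def rat_revise(
    task: str,
    draft_steps: List[str],
    retrieve: Callable[[str], List[str]],
    revise: Callable[[str, List[str], str, List[str]], str],
) -> List[str]:
    """Revise a draft chain of thought one step at a time using retrieved context."""
    revised: List[str] = []
    for step in draft_steps:
        # The query combines the task, the steps revised so far, and the current draft step.
        query = "\n".join([task, *revised, step])
        docs = retrieve(query)
        # Only the current step is rewritten; earlier revised steps are kept as context.
        revised.append(revise(task, revised, step, docs))
    return revised


# Toy stand-ins (assumptions): a keyword lookup instead of a real retriever,
# and string concatenation instead of an LLM rewriting the step.
FACTS = {
    "sort": "Python's list.sort() sorts in place and returns None.",
    "read": "open() returns a file object that is best used in a with-block.",
}


def toy_retrieve(query: str) -> List[str]:
    q = query.lower()
    return [fact for keyword, fact in FACTS.items() if keyword in q]


def toy_revise(task: str, previous: List[str], step: str, docs: List[str]) -> str:
    if not docs:
        return step
    return step + " [evidence: " + " | ".join(docs) + "]"


task = "Print the lines of a file in alphabetical order."
draft = ["Read the file.", "Sort the lines."]
revised = rat_revise(task, draft, toy_retrieve, toy_revise)
for line in revised:
    print(line)
```

In a real setup, `retrieve` would query a search index or the web and `revise` would prompt the model to rewrite the step given the retrieved documents. The loop structure is the part that matters here: retrieval happens once per step rather than once per task.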

Tip: The best way to learn how this works is to read the source code on GitHub. That is far better than staring at the abstract paper.

SFT & CFT: Eliminating Hallucination in Reasoning

Problem: LLM reasoning is often factually incorrect, especially under zero-shot CoT prompting (like “Let’s think step-by-step”). This particularly hurts performance on long-horizon generation tasks that require multi-step, context-aware reasoning, such as code generation.

[To be continued…]